Getting started with Apache Spark using .NET Core

For the past few months, I have been focusing on data and big data technologies, for both work and personal reasons.

This post covers what I have learned about the Apache Spark core and its architecture. Above all, it introduces the .NET Core library for Apache Spark, which aims to bring the Apache Spark tools into the .NET ecosystem. I already wrote about .NET in the data world a few months ago: Data analysis using F# and Jupyter Notebook.

This post covers the following topics:

- Intro to Apache Spark;

- Apache Spark architecture: RDDs, DataFrames, and the driver-workers way of working;

- Querying with Spark SQL using the .NET Core library;

- Considerations around the use of .NET with Apache Spark;

Intro to Apache Spark

Apache Spark is a framework for processing data in a distributed way. Since around 2014, it has been widely regarded as the successor to Hadoop for handling and manipulating big data. Spark's success can be attributed not only to its performance but also to the rich, ever-growing ecosystem that supports and contributes to the evolution of the technology. Moreover, the Apache Spark APIs are readable, testable and easy to understand.

Nowadays, Apache Spark and its libraries provide an ecosystem that can support a team or a company in data analysis, streaming analysis, machine learning and graph processing.

Apache Spark provides a wide set of modules:

- Spark SQL for data analysis over relational data;

- Spark Streaming for processing streaming data;

- MLlib for machine learning;

- GraphX for distributed graph processing;

All these modules and libraries stand on top of the Apache Spark Core API.

Introduction to Spark for .NET Core

.NET Core is the multi-purpose, open-source and cross-platform framework built by Microsoft.

Microsoft is investing a lot in the .NET Core ecosystem, and the .NET team is bringing .NET technologies into the data world. Last year, Microsoft released a machine learning framework for .NET Core, available on GitHub, and it recently shipped the .NET APIs for Apache Spark, also available on GitHub.

For this reason, I turned my attention to Apache Spark and its structure.

Set up the project

Let's see how to work with Apache Spark using the .NET Core framework.

The example described in this post uses the code available on GitHub and the Seattle Cultural Space Inventory dataset available on Kaggle. The project is a simple console application created with the following .NET Core command:

dotnet new console -n blog.apachesparkgettingstarted

The command above creates a new .NET Core console application project. We also need to add the Apache Spark APIs by running the following CLI command in the root of the project:

dotnet add package Microsoft.Spark

This command adds the .NET for Apache Spark package to the current project.

Apache Spark core architecture

As mentioned earlier, the Apache Spark Core APIs are the foundation of the additional modules and features provided by the framework. This section covers some of the basic concepts of the Spark architecture.

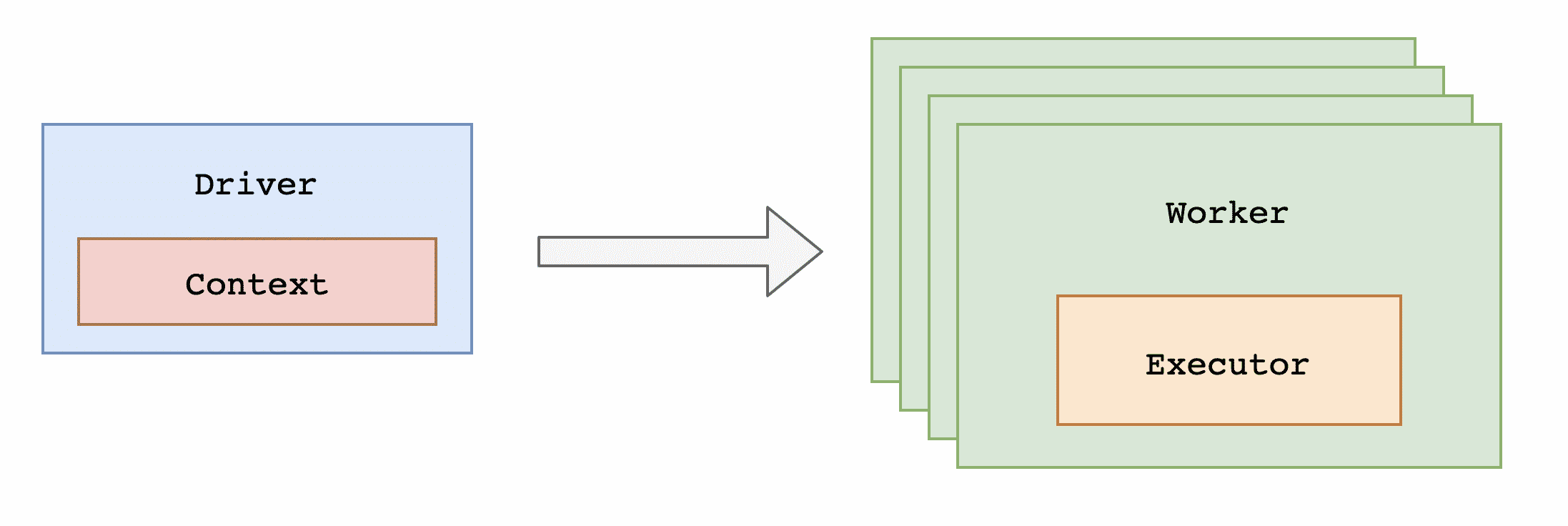

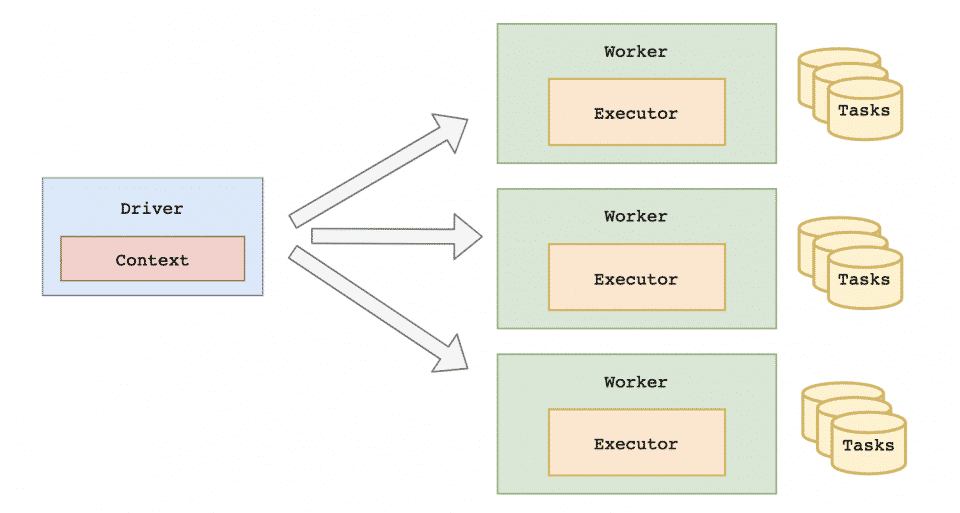

First of all, let's look at the Apache Spark driver-workers concept, which the schema in this section illustrates.

Every Spark application is usually composed of a Driver and a set of Workers. The Driver is the coordinator of the system, and it distributes chunks of work across the different Workers. From Apache Spark 2.0.0 onwards, the SparkSession type provides the single entry point for interacting with the underlying Spark functionality. Moreover, the SparkSession type has the following responsibilities:

- Create the tasks to assign to the different workers;

- Locate and load the data used by the application;

- Handle any failures that occur;

This is an example of C# code that initializes a SparkSession:

The code mentioned above gets or creates a SparkSession instance with the name “My application”. The retrieved SparkSession instance provides all the necessary APIs.
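A minimal sketch of such an initialization, assuming the Microsoft.Spark package is referenced by the project. The namespace and class names are placeholders chosen for illustration:

```csharp
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            // Reuse an already running session if there is one,
            // otherwise create a new session with the given name.
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            // ... work with the session here ...

            // Release the session once the application is done.
            spark.Stop();
        }
    }
}
```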

RDDs, DataFrames core concept

Apache Spark supports developers with a great set of APIs and collection objects. This section describes the two main sets of APIs provided by Spark: RDDs and DataFrames.

The RDD is the foundation of the DataFrame collection. RDD stands for Resilient Distributed Dataset, and it is the basic abstraction in Spark. An RDD represents an immutable, partitioned collection of elements that can be operated on in parallel. RDDs are designed to be resilient and distributed: they spread the work across multiple nodes and partitions, and they handle failures by recomputing the partition that failed.

DataFrames are built on top of RDDs. A DataFrame organizes the collection into named columns, which provides a higher level of abstraction.

The following example shows the definition of a DataFrame:

The code mentioned above reads data from the Seattle Cultural Space Inventory dataset. The snippet loads the data into a DataFrame and automatically infers the columns. After that, the DataFrame collection provides a rich set of APIs that can be used to apply operations on the data, for example:
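As a rough sketch, a DataFrame could be defined by reading the dataset from a CSV file. The file path below is hypothetical and should point to wherever the Kaggle dataset was downloaded. The header and inferSchema keys are standard options of the Spark CSV reader:

```csharp
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            // Hypothetical location of the downloaded dataset.
            const string path = "./data/seattle-cultural-space-inventory.csv";

            // Treat the first row as column names and let Spark infer the column types.
            DataFrame dataFrame = spark
                .Read()
                .Option("header", "true")
                .Option("inferSchema", "true")
                .Csv(path);

            // Print the inferred column names and types.
            dataFrame.PrintSchema();

            spark.Stop();
        }
    }
}
```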
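A sketch of such an operation, assuming the dataset exposes Name and Phone columns as described in this post. The file path is again hypothetical:

```csharp
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            DataFrame dataFrame = spark
                .Read()
                .Option("header", "true")
                .Option("inferSchema", "true")
                .Csv("./data/seattle-cultural-space-inventory.csv"); // hypothetical path

            // Keep only two columns, then drop the rows where Phone is missing.
            // No action is called here, so nothing is computed yet.
            DataFrame withPhone = dataFrame
                .Select("Name", "Phone")
                .Filter(Functions.Col("Phone").IsNotNull());

            spark.Stop();
        }
    }
}
```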

The preceding snippet combines the Select and Filter operations by selecting the Name and the Phone columns from the Dataframe, and by filtering out all the rows without the Phone column populated. Both the Select and Filter methods are part of the transformation APIs of Apache Spark.
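The same selection can presumably also be written as a Spark SQL query. The following sketch registers the DataFrame as a temporary view and queries it. The view name spaces and the file path are assumptions made for illustration:

```csharp
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            DataFrame dataFrame = spark
                .Read()
                .Option("header", "true")
                .Option("inferSchema", "true")
                .Csv("./data/seattle-cultural-space-inventory.csv"); // hypothetical path

            // Expose the DataFrame to the SQL engine under a hypothetical view name.
            dataFrame.CreateOrReplaceTempView("spaces");

            // SQL equivalent of Select("Name", "Phone") followed by a not-null filter.
            DataFrame withPhone = spark.Sql(
                "SELECT Name, Phone FROM spaces WHERE Phone IS NOT NULL");

            // Print the first rows of the query result.
            withPhone.Show();

            spark.Stop();
        }
    }
}
```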

Transformation and Action APIs

More generally, we can divide the RDD and DataFrame APIs into two groups:

- Transformation APIs are all the functions that manipulate the data, such as Select, Filter, GroupBy and Map. Every function that returns another RDD or DataFrame is part of the transformation APIs;

- Action APIs are all the methods that trigger a computation on the data. They return a value to the driver (for example, a count) or nothing at all, rather than another RDD or DataFrame;

Moreover, all the transformation APIs use a lazy-evaluation approach. Hence, transformations on data are not executed immediately. Instead, the Apache Spark engine records the set of operations in a DAG (directed acyclic graph) and executes them only when an action requires a result.

The schema mentioned above describes the distributed data transformation approach used by Apache Spark. Let’s suppose that our code executes an action on the Dataframe collection, such as the Show() method:
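A sketch of that call, reusing the Select and Filter transformations on the Name and Phone columns. The file path is hypothetical:

```csharp
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            DataFrame dataFrame = spark
                .Read()
                .Option("header", "true")
                .Option("inferSchema", "true")
                .Csv("./data/seattle-cultural-space-inventory.csv"); // hypothetical path

            // Transformations: they only describe the work to be done.
            DataFrame withPhone = dataFrame
                .Select("Name", "Phone")
                .Filter(Functions.Col("Phone").IsNotNull());

            // Action: triggers the evaluation and prints the first rows (20 by default).
            withPhone.Show();

            spark.Stop();
        }
    }
}
```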

As you can see from the code mentioned above, Show() doesn’t return anything: unlike the Select() and Filter() methods, it is an action, and it effectively triggers the evaluation of the DataFrame.

The Show() method simply displays rows of the DataFrame data in tabular form.
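The lazy evaluation described in this section can be observed with a sketch like the following. It builds the transformations, prints the plan that Spark has recorded by calling Explain(), and only then runs the job through the Count() action. The file path is hypothetical:

```csharp
using System;
using Microsoft.Spark.Sql;

namespace Blog.ApacheSparkGettingStarted // placeholder namespace
{
    internal static class Program
    {
        private static void Main()
        {
            SparkSession spark = SparkSession
                .Builder()
                .AppName("My application")
                .GetOrCreate();

            DataFrame withPhone = spark
                .Read()
                .Option("header", "true")
                .Csv("./data/seattle-cultural-space-inventory.csv") // hypothetical path
                .Select("Name", "Phone")
                .Filter(Functions.Col("Phone").IsNotNull());

            // Prints the recorded plan; the Select and Filter steps are not run by this call.
            withPhone.Explain();

            // Action: the plan is executed and the number of rows is returned to the driver.
            long rows = withPhone.Count();
            Console.WriteLine($"Rows with a phone number: {rows}");

            spark.Stop();
        }
    }
}
```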

Apache Spark execution and tooling

Let’s proceed by executing the code we implemented in the previous section. Apache Spark provides an out-of-the-box command-line tool called spark-submit to submit and execute code.

It is possible to submit our .NET Core code to Apache Spark using the following command:

spark-submit --class org.apache.spark.deploy.dotnet.DotnetRunner --master local microsoft-spark-2.4.x-0.4.0.jar dotnet <compiled_dll_filename>

The above command uses the spark-submit tool to send and execute the dll that is provided as a parameter.

The result of the execution shows the first 20 rows with a populated phone number.

Spark.NET under the hood

You may have noticed that when you add the Microsoft.Spark package, it also brings in the following jar packages: microsoft-spark-2.4.x-0.4.0.jar and microsoft-spark-2.3.x-0.4.0.jar. Both of them are used to communicate with the native Scala APIs of Apache Spark. Furthermore, if we take a closer look at the source code, available on GitHub, we can understand the purpose of these two packages.

The DotnetBackend Scala class behaves as an interpreter between the .NET Core APIs and the native Scala APIs of Apache Spark. The same approach is also taken by other non-native languages that use Spark, such as R.

The .NET team and community are already pushing for the native adoption of .NET bindings as part of Apache Spark: https://issues.apache.org/jira/browse/SPARK-27006. Moreover, it seems that they are making some progress:

Thank you for reading this far and we look forward to seeing you at the SF Spark Summit in April where we will be presenting our early progress on enabling .NET bindings for Apache Spark.

Final thoughts

This article gave a quick introduction to Apache Spark and to getting started with it using .NET Core. Microsoft is investing a lot in .NET Core and, most importantly, in open source. Languages like Python, Scala and R are definitely more established in the data world. However, the Spark library for .NET and the ML.NET framework show that Microsoft is investing a lot to bring .NET into the data world.

Cover image by Tube mapper.